Snowflake: Schedule, Trigger, Monitor, and Orchestrate Operations

Disclaimer

Your use of this download is governed by Stonebranch’s Terms of Use, which are available at https://www.stonebranch.com/integration-hub/Terms-and-Privacy/Terms-of-Use/

Overview

This Universal Task allows Stonebranch users to orchestrate, schedule, trigger, and monitor the Snowflake load and unload process from different data sources (cloud storage or local VM’s) directly from Universal Controller. It uses Python libraries to perform all functions listed in the following sections. Alternatively, you also can perform all these operations using the snowflake JDBC driver which you can add to the Universal Controller libraries, and use SQL Task to perform any operations with Snowflake (https://docs.snowflake.com/en/user-guide/jdbc-download.html)

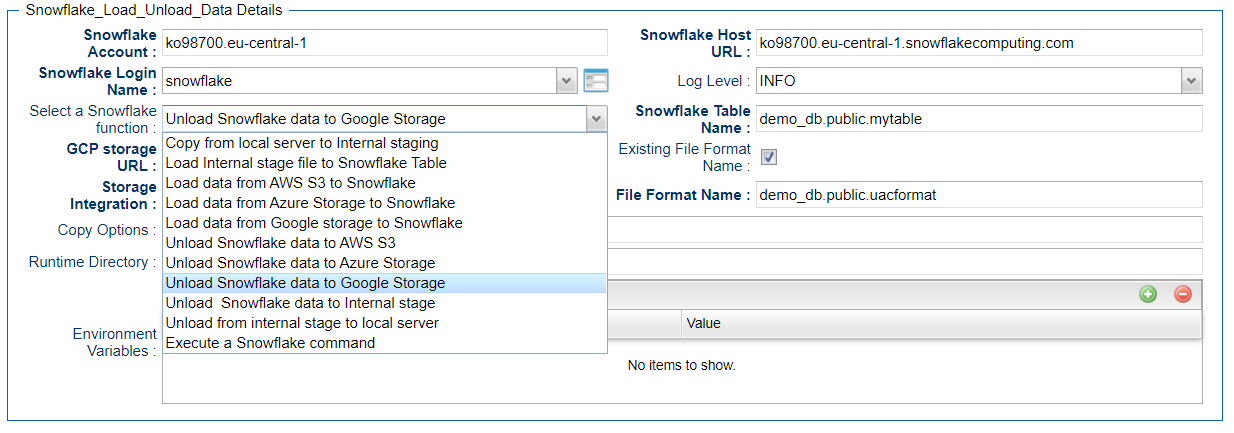

Users can orchestrate the Snowflake functionalities using the following features available in the Universal Task

UAC functionalities (Snowflake -Loading)

Load data from AWS S3 to Snowflake.

Load data from Azure Storage to Snowflake.

Load data from Google storage to Snowflake.

Load Internal stage file to Snowflake Table.

Copy from local server to Internal staging.

UAC functionalities (Snowflake - Unloading)

Unload Snowflake data to AWS S3.

Unload Snowflake data to Azure Storage.

Unload Snowflake data to Google Storage.

Unload Snowflake data to Internal stage.

Unload from internal stage to local server.

UAC functionalities (Snowflake – Execute Commands)

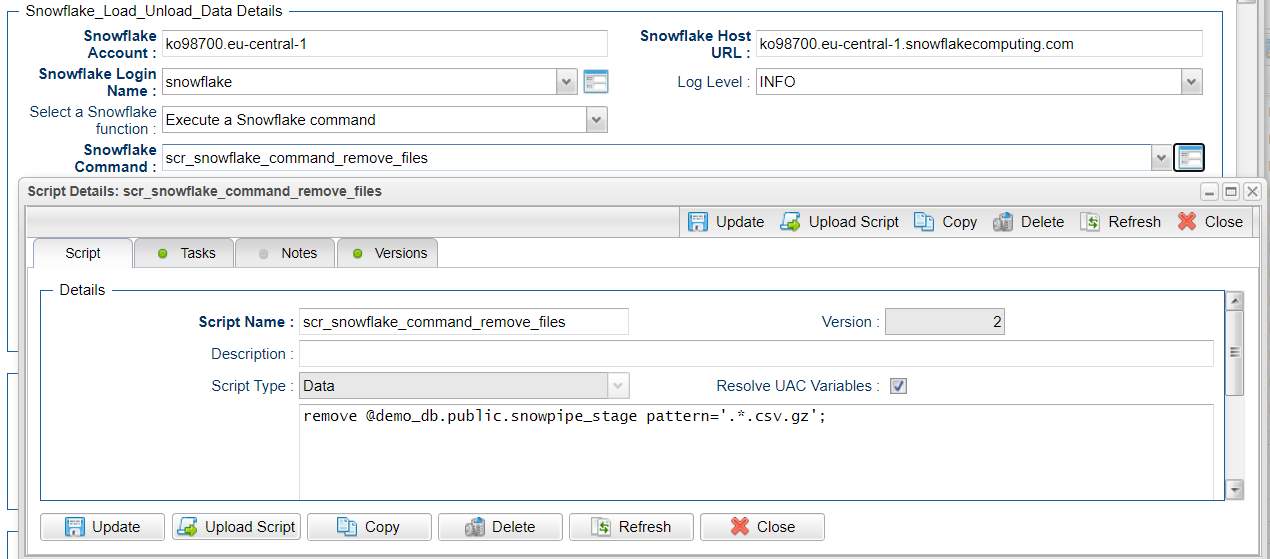

Execute a Snowflake command.

Software Requirements

This integration requires an Universal Agent and a Python runtime to execute the Universal Task against Snowflake.

Software Requirements for Universal Template and Universal Task

Requires Python 3.6 or higher. Tested with the Universal Agent bundled Python distribution.

Python modules required:

- https://raw.githubusercontent.com/snowflakedb/snowflake-connector-python/v2.3.9/tested_requirements/requirements_36.reqs

- snowflake-connector-python

- snowflake-ingest

- Please refer to Snowflake URL : https://docs.snowflake.com/en/user-guide/python-connector-install.html for the latest Python connector details.

Software Requirements for Universal Agent

Either:

Universal Agent for Windows x64 Version 7.0 and later with Python options installed.

Universal Agent for Linux Version 7.0 and later with Python options installed.

Software Requirements for Universal Controller

Universal Controller Version 7.0.0.0 and later.

Software Requirements for the Application to be Scheduled

This Universal Task has been tested with the snowflake-connector-python=2.3.9 and snowflake-ingest=1.0.3.

Technical Considerations

This task uses Python modules snowflake-connector-python and snowflake-ingest to make REST-API calls to Snowflake, Additionally, as a prerequisite, users might need to install other packages listed in https://raw.githubusercontent.com/snowflakedb/snowflake-connector-python/v2.3.9/tested_requirements/requirements_36.reqs.

Snowflake login credential, Snowflake Account name, and URL would be needed in UAC for this Universal Task.

In the case of data ingest from internal staging to Snowflake table through a pipeline, you would need to supply a private key file from local server and public key for the same to be loaded against the Snowflake user used in Universal Task.

Use the Snowflake instructions is in URL : https://docs.snowflake.com/en/user-guide/key-pair-auth.html for Key pair authentication and rotation process.

Key Features

Feature | Description |

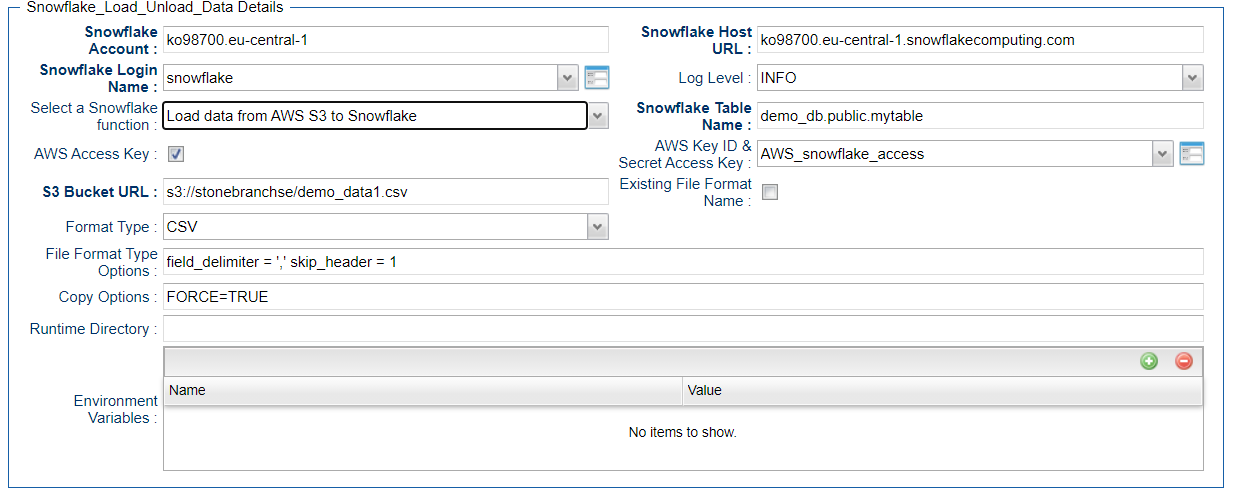

Load data from AWS S3 to Snowflake | Load the S3 bucket file(s) into a Snowflake table, You may specify the file format options and copy options appropriately. |

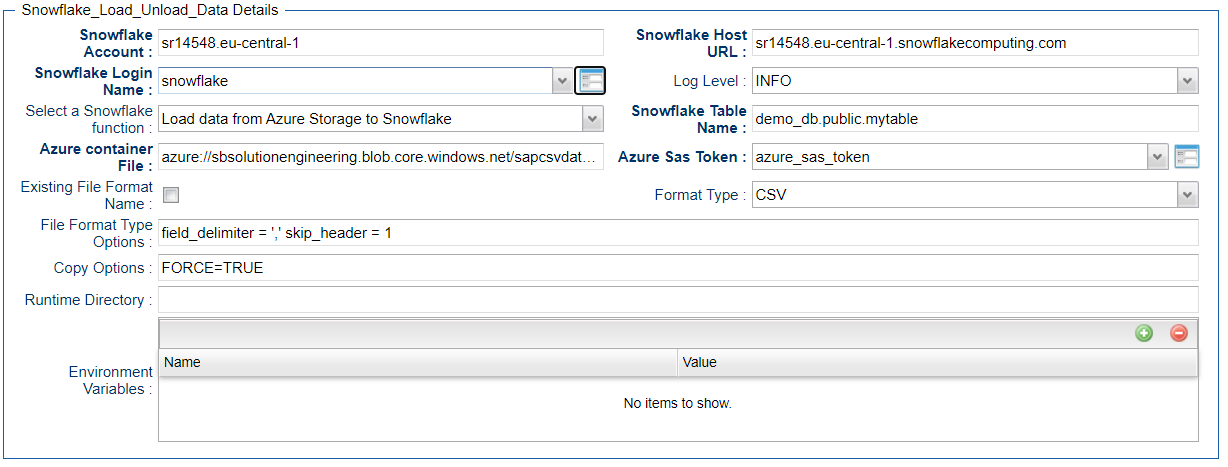

Load data from Azure Storage to Snowflake | Load the Azure container blobs into a Snowflake table, You may specify the file format options and copy options appropriately. |

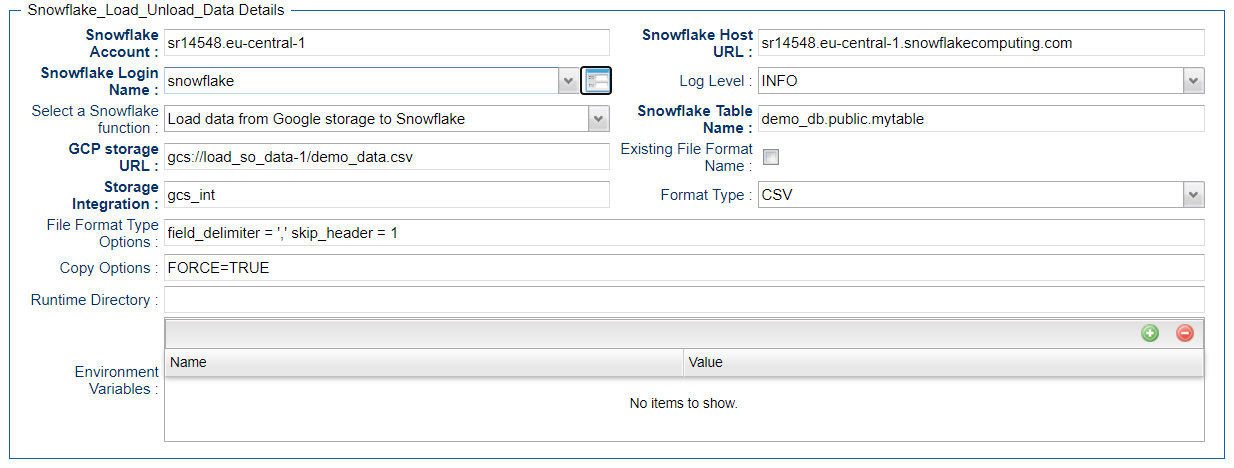

Load data from Google storage to Snowflake | Load the google storage bucket files into a Snowflake table, You may specify the file format options and copy options appropriately. Please note that you will need to define the storage integration in Snowflake and provide this in the Universal Task. |

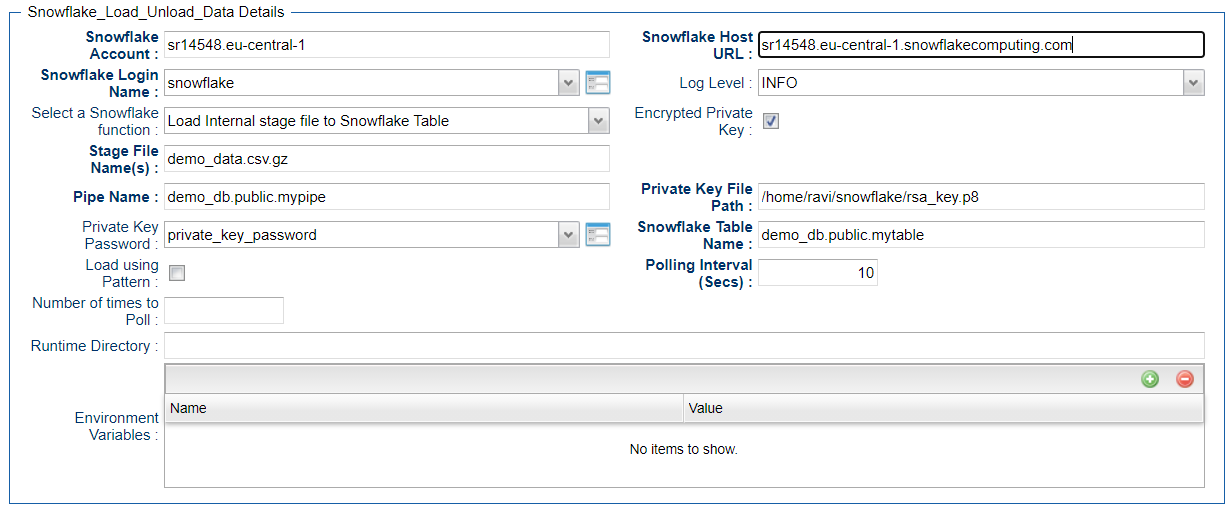

Load Internal stage file to Snowflake Table | Files that are available in Snowflake internal storage to be loaded into a Snowflake table using the pipe name and authentication using private public key files. The data can be loaded in Snowflake using patterns. |

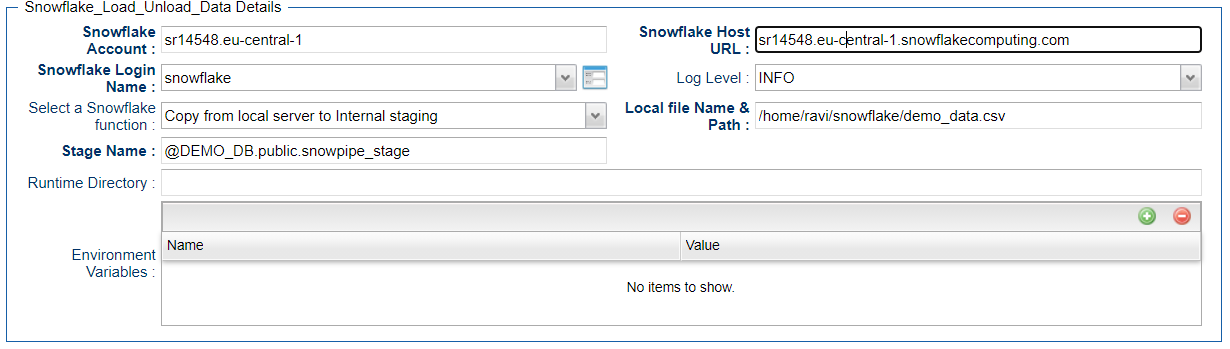

Copy from local server to Internal staging | Copies files from local Windows or Linux server to Snowflake internal staging area. |

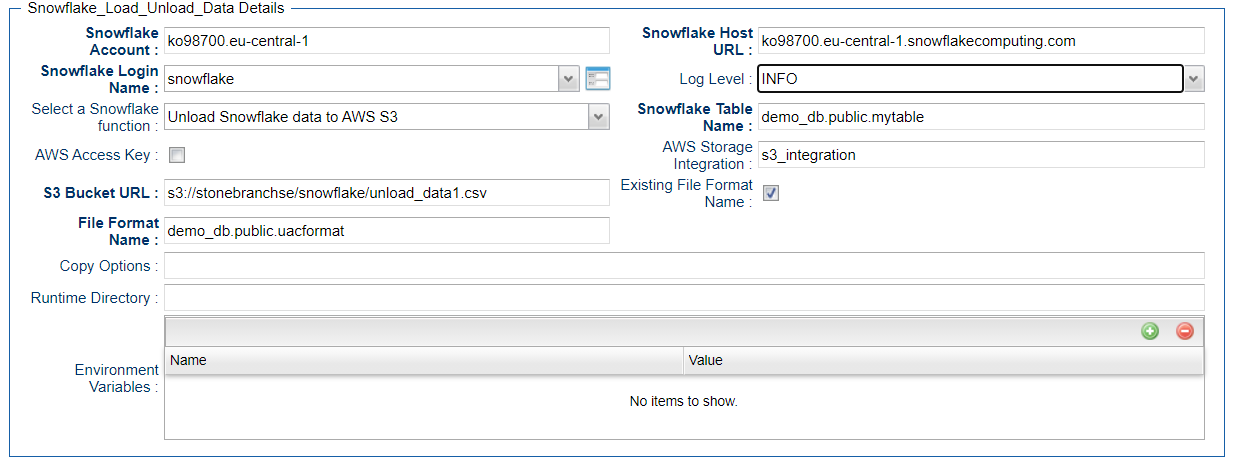

Unload Snowflake data to AWS S3 | This feature helps to unload the data from a Snowflake table to a AWS S3 bucket, file format options and copy options can be provided appropriately. |

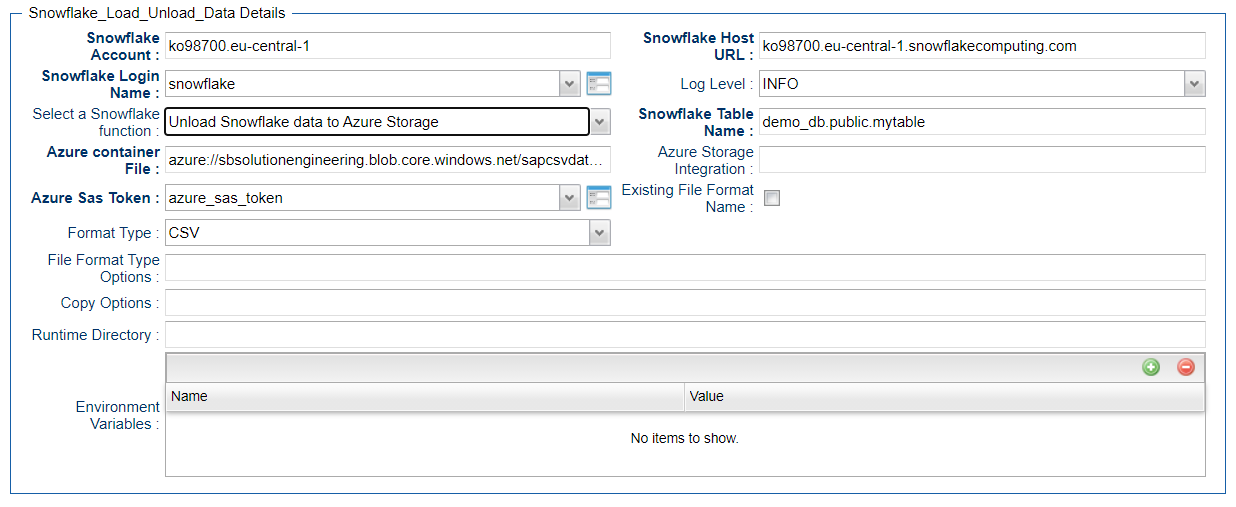

Unload Snowflake data to Azure Storage | This feature helps to unload the data from a Snowflake table to an Azure container; file format options and copy options can be provided appropriately. |

Unload Snowflake data to Google Storage | This feature helps to unload the data from a Snowflake table to a Google cloud Storage; file format options and copy options can be provided appropriately. Also, this would need an storage integration name from Snowflake. |

Unload Snowflake data to Internal stage | Unloads the Snowflake table into an internal staging area in Snowflake. |

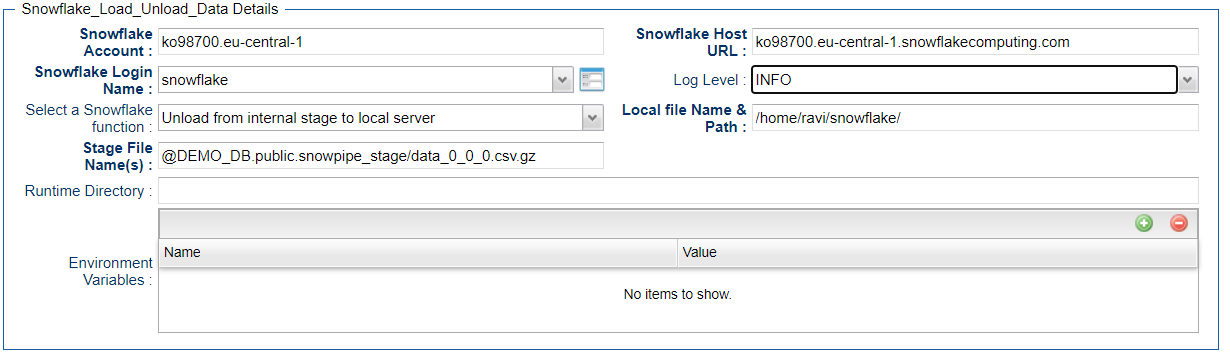

Unload from internal stage to local server | This feature helps to copy the files in staging area to a local windows or a Linux server. |

Execute a Snowflake command | Users can use this feature to execute snowflake commands; for example: Copy, Remove, Select, Delete, etc. |

Import Snowflake Integration Downloadable Universal Template

To use this downloadable Universal Template, you first must perform the following steps:

- This Universal Task requires the Resolvable Credentials feature. Check that the Resolvable Credentials Permitted system property has been set to true.

- To import the Universal Template into your Controller, follow the instructions here.

- When the files have been imported successfully, refresh the Universal Templates list; the Universal Template will appear on the list.

Configure Snowflake Integration Universal Task

For the new Universal Task type, create a new task, and enter the task-specific details that were created in the Universal Template.

Field Descriptions for Snowflake Integration Universal Task

Field | Description |

Snowflake Account | Provide the Snowflake user account; for example, sr14548.eu-central-1. |

Snowflake Host URL | Specify your host information in the form of a URL; for example, sr14548.eu-central-1.snowflakecomputing.com. |

Snowflake Login Name | Specify your Snowflake login name.. |

| Warehouse | Specify the warehouse name; If not specified, connects to the default warehouse for the user. |

Log Level | Select a log level. |

Select a Snowflake function | Select the required Snowflake function (if the required function not available, check the SQL task to invoke snowflake DB). |

Snowflake Command | Provide a Snowflake command either in SQL or put / get commands etc. |

Local file Name & Path | Provide the local file name that need to be copied to stage. |

Stage Name | Provide the stage(internal) name in Snowflake. |

Encrypted Private Key | Select only if you have a encrypted private key. |

Stage File Name(s) | Provide the stage file names; if there are multiple files, separate by comma. |

Pipe Name | Specify the fully-qualified name of the pipe to use to load the data. |

Private Key File Path | Provide the private key file path to establish connection to Snowflake for data ingest. |

Private Key Password | Provide the password for private if it is encrypted. |

Snowflake Table Name | Provide the full path and the Snowflake table name where the table to be loaded. |

Use AWS Credentials | Check this if you need to supply AWS access key credentials. |

AWS Key ID & Secret Access Key | Provide the AWS secret Access Key (runtime user AWS Key id and secret key in the password section). |

AWS Storage Integration | Provide the name of the AWS storage integration created in Snowflake. |

Load using Pattern | If you need load the data using pattern, check this option. |

S3 Bucket URL | Provide your S3 bucket URL s3://<your_s3_bucket>/data/. |

Azure container File | Provide the Azure container file URL starting with azure:// |

Azure Storage Integration | Provide the Azure storage integration created in Snowflake. |

GCP storage URL | Provide the bucket name and the file name; for example, gcs://mybucket/data/files. |

Azure Sas Token | Provide the Azure Sas Token. |

Existing File Format Name | Specify an existing named file format to use for loading data into the table. |

Storage Integration | Provide the Snowflake storage integration details. |

Pattern | Regular expression pattern string - specifying the file names and/or paths to match. |

File Format Name | Specifies an existing named file format to use for loading data into the table. |

Format Type | Specify the format type: CSV | JSON | AVRO | ORC | PARQUET | XML. |

File Format Type Options | Specify the file format type options; for example, FIELD_DELIMITER = '|' if CSV type -- Refer to https://docs.snowflake.com/en/sql-reference/sql/copy-into-table.html |

Copy Options | Include other copy options; for example, ON_ERROR = CONTINUE or FORCE=TRUE- Refer to https://docs.snowflake.com/en/sql-reference/sql/copy-into-table.html |

Polling Interval (Secs) | Provide the Polling Interval time in Seconds, in the case of data ingestion from internal staging to Snowflake Table. |

Number of times to Poll | Specify the number of times to poll; otherwise, default is set to 50, in the case of data ingestion from internal staging to Snowflake Table. |

Examples for Snowflake Integration Universal Tasks

Load Data from Azure Storage to Snowflake Table

Load Data from AWS S3 to Snowflake Table

Load Data from Google Cloud Storage to Snowflake Table

Copy Local File to Snowflake Staging

Load Snowflake Staging File to Table

Unload Snowflake Table to AWS S3 Storage

Unload Snowflake Table to Azure Storage

Download Snowflake Stage File to Local Linux Server

Copy Multiple Files (Linux Server) to Snowflake Staging Area

Executing a Snowflake Command

Snowflake Universal Task Functions

Document References

This document references the following documents:

Name | Description |

|---|---|

User documentation for creating Universal Templates in the Universal Controller user interface. | |

User documentation for creating Universal Tasks in the Universal Controller user interface. |

%20to%20SNowflake%20Staging%20Area%20-%206.9.0.0.png?version=1&modificationDate=1636391240000&cacheVersion=1&api=v2)